Photo by Martin Shreder on Unsplash

Big O Notation and Scalability: Maximizing Performance in Your Code

Unlocking the Secrets of Time and Space Complexity for Efficient Algorithm Design

What Is Good Code?

High-quality code demonstrates cleanliness, efficiency, reliability, and scalability. It adheres to coding standards, gracefully handles errors, and is designed to accommodate growing data or user demands. It incorporates techniques such as optimizing algorithms and data structures, minimizing time and space complexity, and embracing the principles of Big O notation.

Speaking of Big O notation: in this article we will focus on the scalability of our algorithms, that is, how to make them work correctly without letting their time (and space) complexity grow out of hand.

Big O and Scalability

Big O Notation is a valuable tool for evaluating algorithm efficiency and scalability. It enables us to understand how an algorithm's performance changes as the input size increases. Algorithms with lower Big O complexities are more scalable, making them better suited for handling larger data sets or increasing workloads.

The Big-O Complexity Chart serves as a valuable tool for comparing and assessing the performance of different algorithms, particularly in terms of their scalability with varying input sizes. Algorithms with lower time complexity, such as O(1), O(log n), or O(n), are represented by flatter or shallower growth curves on the chart, indicating superior scalability. Conversely, algorithms with higher time complexity, such as O(n^2), O(2^n), or O(n!), exhibit steeper growth curves, signifying limited scalability. This graphical representation enables developers and analysts to make informed decisions when selecting algorithms, taking into account their scalability requirements. By visually understanding how an algorithm's performance evolves as the input size increases, one can identify more efficient algorithmic solutions tailored to specific scenarios.

When we talk about Big O and the scalability of code, we simply mean this: as the input grows larger and larger, how much does the algorithm or function slow down? The less it slows down, the more scalable it is.

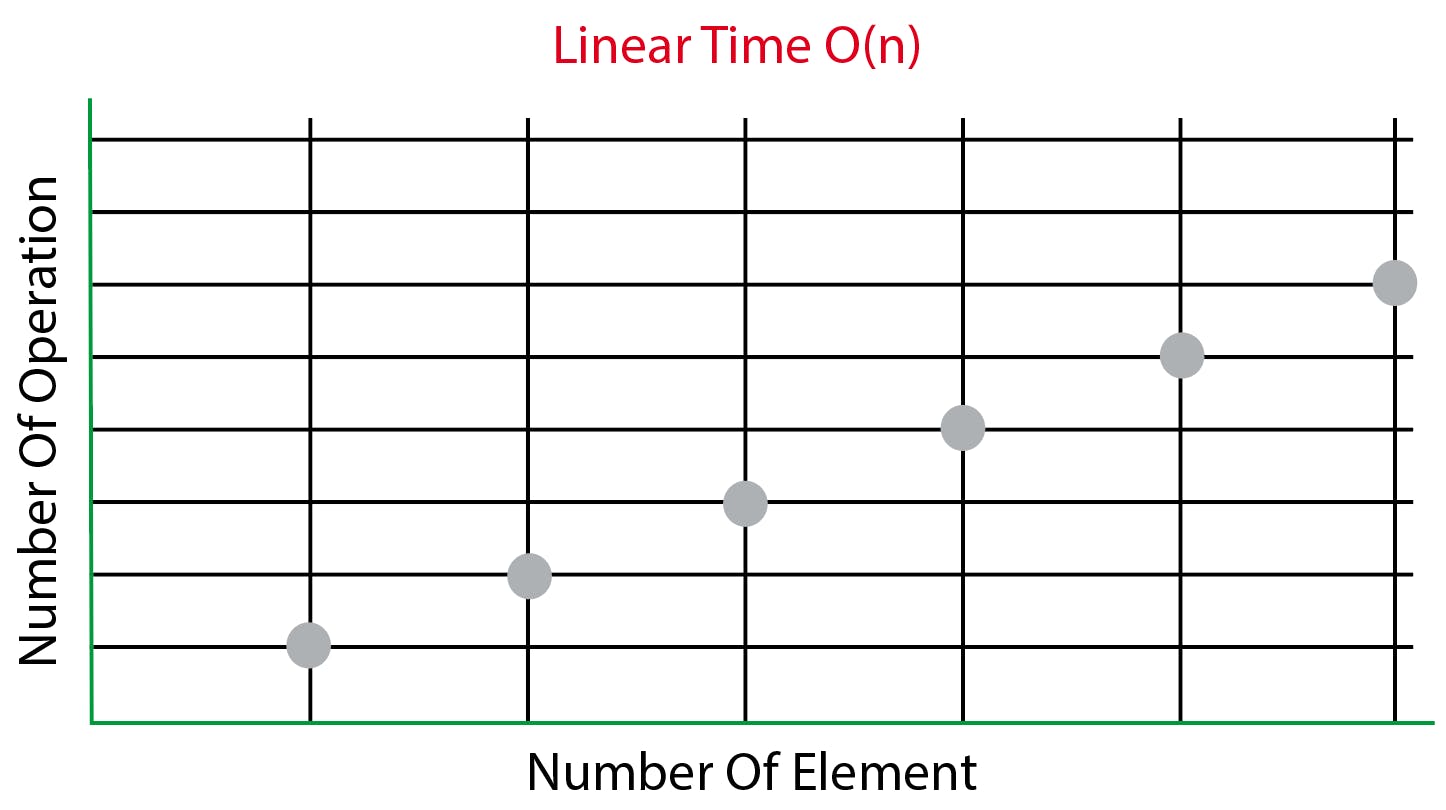
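To make the chart's message concrete, here is a minimal sketch that tabulates idealized operation counts for a few of the complexity classes discussed above. The `growthTable` helper is hypothetical and written only for this illustration; the numbers ignore constant factors and are not measured timings.

```javascript
// Idealized operation counts for common complexity classes.
// No constant factors, no hardware effects: just the growth rate.
function growthTable(sizes) {
  return sizes.map((n) => ({
    n,
    constant: 1,                          // O(1)
    logarithmic: Math.ceil(Math.log2(n)), // O(log n)
    linear: n,                            // O(n)
    quadratic: n * n,                     // O(n^2)
    exponential: 2 ** n,                  // O(2^n)
  }));
}

console.table(growthTable([10, 100, 1000]));
```

Going from n = 10 to n = 1000, the linear column grows 100-fold while the quadratic column grows 10,000-fold, which is exactly the steeper curve the chart shows.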

O(n) or Linear Time

O(n) or Linear Time complexity signifies an algorithm whose execution time scales proportionally with the input size. As the input grows, the algorithm's processing time increases linearly.

Consider the following JavaScript code example that demonstrates an O(n) algorithm:

```javascript
function linearTimeAlgorithm(array) {
  for (let i = 0; i < array.length; i++) {
    // Perform an operation on each element
    console.log(array[i]);
  }
}
```

In the code snippet, the linearTimeAlgorithm function accepts an array as input and utilizes a for loop to iterate over each element. The loop iterates n times, where n represents the size of the input array. Thus, the time complexity of this algorithm is O(n), indicating a linear relationship between the input size and the execution time.

Whatever the array's length, the function visits each element exactly once, so the execution time grows linearly with the number of elements.

It is worth noting that the provided code example is a simplified illustration of O(n) complexity. In practice, what happens inside the loop matters: if each iteration does work that itself depends on n, such as a nested loop over the same array, the overall complexity is no longer O(n). As long as each iteration does a constant amount of work, the total work grows linearly with the input size.
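A classic O(n) algorithm where each iteration does constant work is linear search. The sketch below is a hypothetical `linearSearch` helper (not from the original article) that also counts comparisons, so you can see the step count track the input size.

```javascript
// Linear search: check each element in turn until the target is found.
// Each iteration does constant work, so the worst case
// (target missing or in the last position) is n comparisons: O(n).
function linearSearch(array, target) {
  let steps = 0; // number of comparisons, for illustration only
  for (let i = 0; i < array.length; i++) {
    steps++;
    if (array[i] === target) {
      return { index: i, steps };
    }
  }
  return { index: -1, steps };
}

const data = Array.from({ length: 1000 }, (_, i) => i); // [0, 1, ..., 999]
console.log(linearSearch(data, 999)); // { index: 999, steps: 1000 }
console.log(linearSearch(data, -5));  // { index: -1, steps: 1000 }
```

Searching for the first element takes a single step, but Big O describes the worst case here: with 1,000 elements, a miss costs 1,000 comparisons, and with 1,000,000 elements it would cost 1,000,000.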

O(1) or Constant Time

O(1) or Constant Time complexity refers to an algorithm that exhibits a consistent and predictable execution time, regardless of the size of the input. It means that as the input grows larger, the algorithm's performance remains unaffected, offering a constant level of efficiency.

Let's consider a simple JavaScript code snippet that exemplifies an O(1) algorithm:

```javascript
function constantTimeAlgorithm(array) {
  // Perform an operation on the first element
  console.log(array[0]);
}
```

In the provided code, the constantTimeAlgorithm function takes an array as input and operates specifically on the first element. Regardless of how many elements the array contains, the function accesses and processes the first element directly, without any dependence on the array's size. As a result, the execution time of the algorithm remains constant.

This constant execution time characterizes the algorithm's time complexity as O(1), where the "O" notation represents the order of growth. It signifies that the algorithm's efficiency remains constant, regardless of changes in the input size.

Achieving constant time complexity often relies on efficient data structures or specific optimizations that allow for direct access and processing of the required element. Algorithms with O(1) complexity offer predictable and consistent performance, making them highly desirable for scenarios that demand reliable and efficient execution, irrespective of the input size.
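Array indexing, as in `constantTimeAlgorithm`, is one route to O(1) access; a keyed lookup in a `Map` is another common one. The sketch below assumes a hypothetical `usersById` table and `getUserName` helper. Note the hedge in the comments: mainstream JavaScript engines implement `Map` lookups in constant time on average, but the ECMAScript specification itself only requires access to be sublinear in the number of entries.

```javascript
// Build the Map once (O(n)), then look entries up by key.
// Lookups are typically constant time on average in mainstream engines;
// the ECMAScript spec only guarantees sublinear access.
const usersById = new Map([
  [101, "Ada"],
  [102, "Grace"],
  [103, "Linus"],
]);

function getUserName(id) {
  // A single keyed lookup: no loop over the entries.
  return usersById.get(id) ?? "unknown";
}

console.log(getUserName(102)); // Grace
console.log(getUserName(999)); // unknown
```

The design choice here is to pay an O(n) cost once to build the `Map` so that every later lookup avoids scanning the whole collection.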

O(n^2) or Quadratic Time

O(n^2) or Quadratic Time complexity represents an algorithm whose execution time grows in proportion to the square of the input size. As the input grows, the running time rises much faster than the input itself: doubling n roughly quadruples the work. (This is polynomial growth, not exponential growth like O(2^n).) This complexity is often associated with algorithms involving nested iterations or comparisons of all possible pairs of elements.

Let's examine a JavaScript code example that illustrates an algorithm with O(n^2) complexity:

```javascript
function quadraticTimeAlgorithm(array) {
  for (let i = 0; i < array.length; i++) {
    for (let j = 0; j < array.length; j++) {
      // Perform an operation or comparison
      console.log("Operation performed for elements:", array[i], array[j]);
    }
  }
}
```

In the provided code, the quadraticTimeAlgorithm function accepts an array as input. It contains two nested loops that iterate over the array elements. For each element chosen by the outer loop, the inner loop performs an operation or comparison with every element of the array, including that element itself.

As a consequence, the total number of operations grows quadratically with the input size, leading to a time complexity of O(n^2). For an array of size n, both loops iterate n times, resulting in a total of n * n or n^2 iterations.
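To see the n * n count directly, here is a sketch with a hypothetical `countQuadraticOperations` helper that replaces the `console.log` in `quadraticTimeAlgorithm` with a counter and runs the same nested loop shape for a few input sizes.

```javascript
// Count how many times the body of an n-by-n nested loop runs.
function countQuadraticOperations(n) {
  let operations = 0;
  for (let i = 0; i < n; i++) {
    for (let j = 0; j < n; j++) {
      operations++; // stands in for the console.log in quadraticTimeAlgorithm
    }
  }
  return operations;
}

for (const n of [10, 20, 40]) {
  console.log(n, countQuadraticOperations(n)); // prints 10 100, then 20 400, then 40 1600
}
```

Each time n doubles, the operation count quadruples, which is the signature of quadratic growth.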

It is essential to note that algorithms with quadratic time complexity can become inefficient for larger input sizes. The execution time increases rapidly as the input grows, which makes them a poor fit when the data is expected to scale.

When dealing with algorithms exhibiting O(n^2) complexity, it is advisable to explore more efficient algorithmic approaches or optimize the code to reduce the number of iterations or comparisons.
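A common example of this kind of optimization is duplicate detection. The sketch below uses two hypothetical helpers: `hasDuplicateQuadratic` compares every pair of elements (about n(n-1)/2 comparisons, still O(n^2)), while `hasDuplicateLinear` remembers values already seen in a `Set`, bringing the time down to O(n) on average. For ordinary values such as numbers and strings, both return the same answer.

```javascript
// O(n^2): compare every pair of elements once (j starts after i).
function hasDuplicateQuadratic(array) {
  for (let i = 0; i < array.length; i++) {
    for (let j = i + 1; j < array.length; j++) {
      if (array[i] === array[j]) return true;
    }
  }
  return false;
}

// O(n) time on average: one pass, remembering values already seen.
// Trade-off: the Set needs up to O(n) extra memory.
function hasDuplicateLinear(array) {
  const seen = new Set();
  for (const value of array) {
    if (seen.has(value)) return true;
    seen.add(value);
  }
  return false;
}

console.log(hasDuplicateQuadratic([3, 1, 4, 1, 5])); // true
console.log(hasDuplicateLinear([3, 1, 4, 1, 5]));    // true
console.log(hasDuplicateLinear([2, 7, 1, 8]));       // false
```

Note the trade-off: the faster version buys its speed with extra memory, which is where space complexity comes in.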

Understanding the time complexity of an algorithm aids in evaluating its efficiency and scalability, enabling informed decisions regarding algorithm selection and optimization strategies.

Space Complexity

Space complexity refers to the amount of memory an algorithm needs to solve a problem. It usually measures the auxiliary space, meaning the extra memory the algorithm uses beyond the input itself. Analyzing space complexity is essential for evaluating memory usage and optimizing algorithm efficiency.

Let's consider a JavaScript code example to illustrate space complexity:

```javascript
function sumNumbers(n) {
  let sum = 0; // Constant space

  for (let i = 1; i <= n; i++) {
    sum += i; // Constant space
  }

  return sum;
}
```

In the given code, the sumNumbers function calculates the sum of numbers from 1 to n and returns the result. Now, let's analyze the space complexity:

- The `sum` variable stores the running total and occupies a constant amount of space, regardless of the input size `n`. It requires a fixed memory allocation, so it consumes constant space (O(1)).
- The `i` variable in the `for` loop holds the current iteration value. Like `sum`, it uses a constant amount of space (O(1)), because it does not depend on the input size.

Overall, the sumNumbers function exhibits a constant space complexity of O(1). It utilizes a fixed amount of memory that remains constant regardless of the input size.

Remember that space complexity may also involve additional data structures or variables used within an algorithm. It is important to consider their memory usage when analyzing space complexity.
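For contrast with `sumNumbers`, here is a sketch that computes the same sum but first materializes every number in an array, so its extra memory grows with n. Both `buildNumbers` and `sumNumbersWithArray` are hypothetical helpers written for this comparison.

```javascript
// O(n) extra space: the result array gets one slot per number.
function buildNumbers(n) {
  const numbers = [];
  for (let i = 1; i <= n; i++) {
    numbers.push(i); // one new element per iteration
  }
  return numbers;
}

// Same result as sumNumbers, but via an intermediate array:
// O(n) time and O(n) space, versus O(n) time and O(1) space.
function sumNumbersWithArray(n) {
  return buildNumbers(n).reduce((total, x) => total + x, 0);
}

console.log(buildNumbers(5));        // [ 1, 2, 3, 4, 5 ]
console.log(sumNumbersWithArray(5)); // 15
```

Both functions take linear time, but only `sumNumbers` keeps its memory use flat as n grows, which is why it is the better choice when only the total is needed.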

Understanding the space complexity of an algorithm is crucial for assessing memory requirements and identifying potential scalability issues. By optimizing memory usage, developers can improve algorithm efficiency and overall performance.